The Dead Internet Is Not a Theory

Last year, in January, Forbes published an article titled “The Dead Internet Theory, Explained,” written by Dani Di Placido. While this article didn’t invent the theory, it was one of the first to thoroughly explain what the theory is and where it came from. The Dead Internet Theory, as described by Di Placido, is “the belief that the vast majority of internet traffic, posts and users have been replaced by bots and AI-generated content, and that people no longer shape the direction of the internet.” The article also gives examples from X (formerly Twitter) of users’ frustration over AI-generated content. Di Placido ends the article by addressing whether the theory is real, writing: “Like the best conspiracy theories, the Dead Internet Theory fictionalized a depressing truth; the internet has been walled off by mega-corporations, and is now beginning to fill up with AI-generated sludge.” It’s been well over a year since that article was published, and the dead internet is no longer a “theory.”

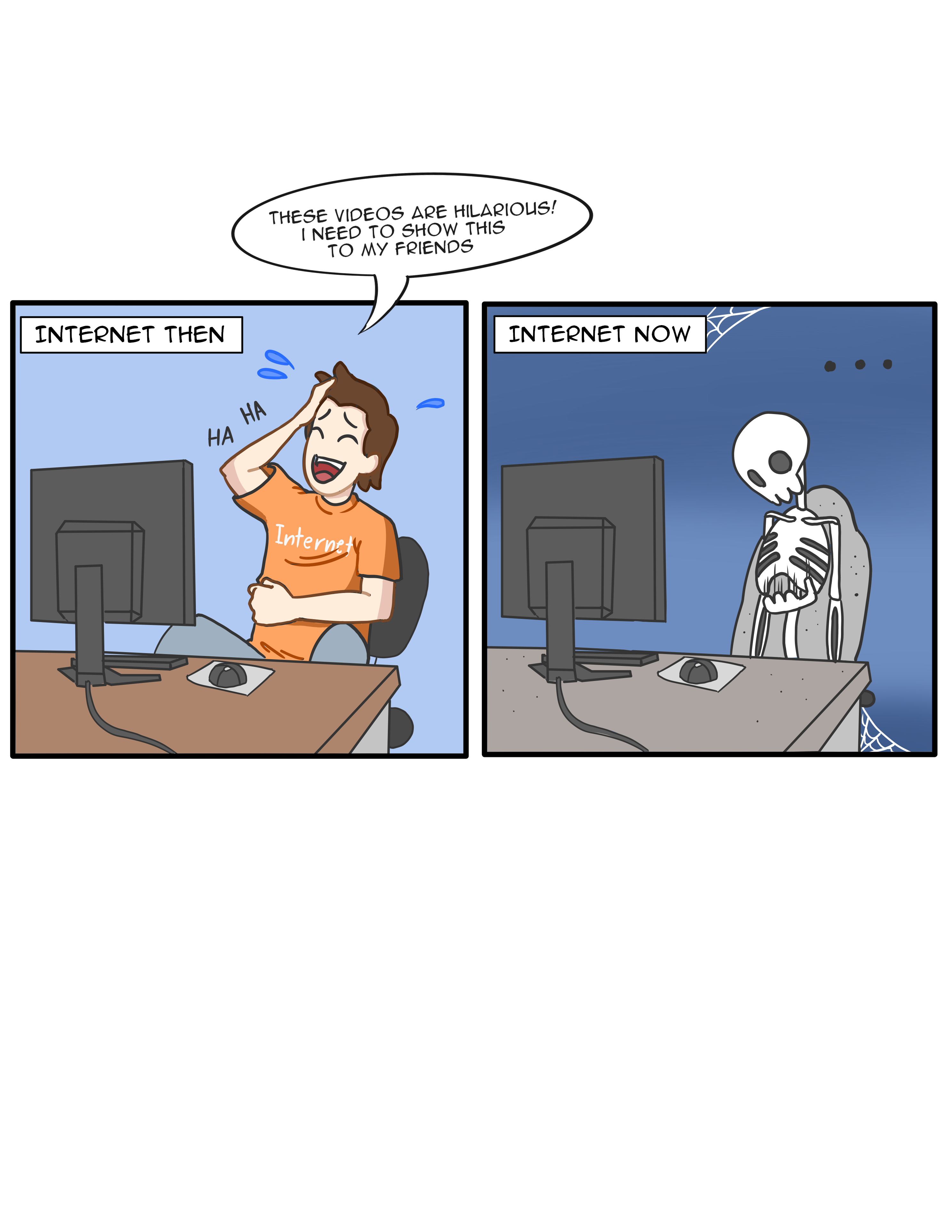

One of the biggest components of the dead internet is the reliance on AI. Over the last two to three years, there have been countless forum posts, YouTube videos, and TikToks discussing how AI is “ruining” or “killing” the internet and online culture. The most common complaints are the popularity of AI-generated images on Facebook, the staggering number of bot accounts and posts on social media, and the unnecessary presence of AI tools on websites. The original Forbes article includes many tweets from people pointing out that certain viral posts had a suspicious number of likes and replies, with many of those replies being spam.

The 2024 Imperva Bad Bot Report found that almost 50 percent of all internet traffic comes from “non-human sources.” Anthony Cuthbertson cites this report in his article for The Independent, “Bots now make up the majority of all internet traffic,” writing: “The researchers noted that the emergence of advanced artificial intelligence tools like OpenAI’s ChatGPT and Google’s Gemini have led to new cyber threats for web users.” He adds: “These AI tools can be used to carry out spamming campaigns or even distributed denial-of-service (DDoS) attacks that knock sites offline by overwhelming them with fake traffic.” These issues have only gotten worse in the year since the report was released.

With so much AI online, simply logging onto social media means navigating an overwhelming number of bots, fake content, and fake traffic, and running the risk of your own account being taken over by bots. It is also becoming more and more difficult to tell whether the content you’re interacting with is real or AI-generated. So if so much of the internet is fake, non-human content, is it even worth interacting with?

One consequence of the internet being overrun by AI and bots is the new methods of age verification that some social media platforms have been implementing. In July, the United Kingdom began enforcing new rules under the Online Safety Act. The new rules, which target children’s access to pornography, require certain websites to implement age verification tools, such as submitting a legal ID or biometric data. Many have criticized these rules as a blatant breach of privacy and unnecessary censorship.

Less than a week after these rules took effect, YouTube announced that it was expanding its existing age verification tools in the US. According to the official YouTube blog, “We will use AI to interpret a variety of signals that help us to determine whether a user is over or under 18. These signals include the types of videos a user is searching for, the categories of videos they have watched, or the longevity of the account.” The blog explains that if its AI flags a user as being under 18, YouTube will immediately disable personalized ads and limit what kind of content that viewer can see. It goes on to say that if your account is incorrectly flagged, you will have to verify your age by providing YouTube with identification, such as a credit card or government ID.

Within days of this announcement, people were outraged and began pointing out the many holes in the policy. Many adults watch content that an AI might categorize as being for kids, such as card collecting, video games, anime, and toy reviews. And as with the UK’s Online Safety Act, there is a privacy concern: handing our personal information to a giant social media platform is a huge risk. Another concern is that giving AI the power to decide what content is appropriate may mean giving up too much individual agency.

The internet’s impact on humanity and culture is undeniable. It gave people access to information from all over the world. It allowed people to connect across borders and oceans, and it created a culture that many people felt connected to and helped shape. But in the last couple of years, we have watched that culture slowly suffocate and die. The rise of bots and fake content has drained the human interaction out of social media that used to be fun, and the restriction of information and content is becoming increasingly normalized, further limiting human agency. Clearly, the “dead internet theory” is no longer a theory.